Why Running LLM Tools Locally Is the Future of AI

Large Language Models (LLMs) have reshaped artificial intelligence, but most people still access them through cloud-based services such as ChatGPT or hosted APIs from OpenAI and others. What if you could run these models directly on your own computer, without relying on the cloud? That’s exactly what local LLM tools let you do, and the benefits are substantial.

When you choose to run LLMs locally, you unlock a new level of independence, privacy, and control. Here’s why this approach is gaining traction:

- Unmatched Privacy: Since everything happens on your device, your data never leaves your hands. There’s no external server processing your information, eliminating third-party involvement.

- Complete Customisation: You can tailor models to your specific needs, adjust their behavior, and even train them on custom data.

- Cost Efficiency: Once the setup is complete, there are no monthly subscriptions or usage-based fees. Your ongoing costs are the hardware you already own and the electricity to run it.

- Offline Access: You can use your AI tools anytime, anywhere, even without an internet connection.

Running LLMs on your own machine doesn’t just give you an AI assistant — it gives you total freedom and control over how it works and how your data is handled.

The Advantages of Local LLMs Over Cloud-Based AI

The move toward running AI models locally is about more than just convenience — it’s about redefining AI ownership. Let’s take a deeper dive into why local LLMs are becoming the go-to choice for many users:

Your Data Stays Yours

Data privacy is one of the most pressing concerns in today’s digital age. By running LLMs locally, you ensure that every piece of information you input stays on your device. No external server processes your conversations, ideas, or confidential data, giving you complete ownership and peace of mind.

Faster, Smoother Performance

Cloud-based AI tools often depend on network speeds and server availability. With local models, everything runs on your own hardware, so there is no network round trip. On a capable machine, that means responsive interactions that don’t hinge on connection quality or server load.

Full Flexibility and Customisation

One of the biggest perks of local LLMs is the ability to tweak and train them however you like. You can feed them custom datasets, fine-tune their behavior, and shape their responses, creating an AI assistant tailored specifically to your needs.

One-Time Investment, Long-Term Savings

Many cloud-based AI services require ongoing subscription fees or usage-based pricing. Local LLMs eliminate these costs: after the initial setup, you have powerful AI capabilities without any recurring expenses.

Access Anytime, Anywhere

A local LLM works regardless of internet access. Whether you’re on a flight, in a remote area, or facing network issues, your AI stays accessible and fully functional.

Enhanced Security

For businesses, researchers, and anyone working with sensitive information, keeping data in-house is essential. Local LLMs reduce the risk of leaks or breaches by ensuring your data never leaves your device.

Creative Freedom for Developers

Local LLMs offer developers an open playground for experimentation. You can test different models, adjust parameters, and build custom applications without hitting usage limits or depending on external infrastructure.

Ready to embrace the freedom of local AI? The future is in your hands. 🚀

Best Free Local LLM Tools You Can Start Using Today

If you’re ready to take advantage of local LLMs, here’s a look at some of the best tools available — each offering unique strengths for different types of users:

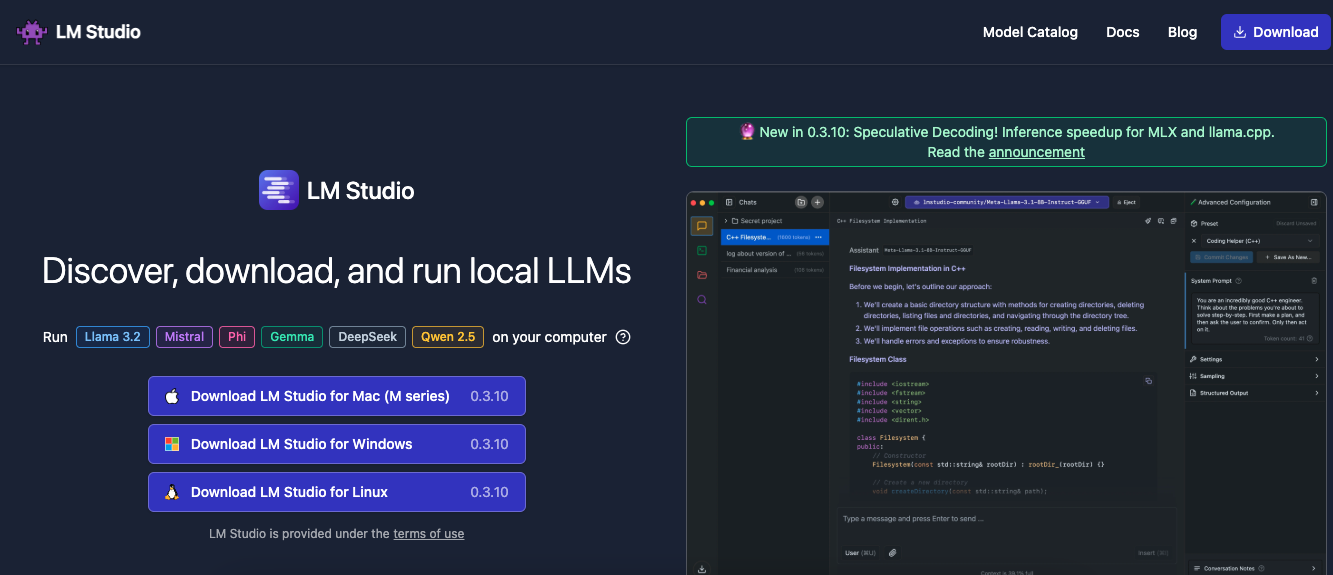

1. LM Studio

- Perfect For: Beginners and developers seeking an easy-to-use platform

- Works On: Windows, Mac, Linux

Why You’ll Love It:

LM Studio simplifies the process of running local language models. Its user-friendly interface makes working with popular models like LLaMA, Mistral, and Gemma effortless, even for those without technical expertise.

Developers will also appreciate the built-in local inference server, which makes building and testing AI-powered apps a breeze.

Top Features:

- Beginner-friendly design

- Cross-platform compatibility

- Support for leading AI models

- Local inference capabilities for app development
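
To give a feel for what that local inference server makes possible, here is a minimal Python sketch that prepares and sends a chat request to an OpenAI-style endpoint running on your own machine. The base URL `http://localhost:1234/v1` and the model name `local-model` are assumptions for illustration; check the server settings in LM Studio for the actual address and the identifier of the model you have loaded.

```python
import json
import urllib.request

# Assumed defaults: adjust these to whatever your local server reports.
BASE_URL = "http://localhost:1234/v1"
MODEL = "local-model"  # hypothetical model identifier


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt to the local server and return the reply text."""
    request = build_chat_request(prompt)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running and a model loaded, calling `ask("Summarise this paragraph for me")` should return the model’s reply as a string. The request never leaves `localhost`, which is the whole point.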

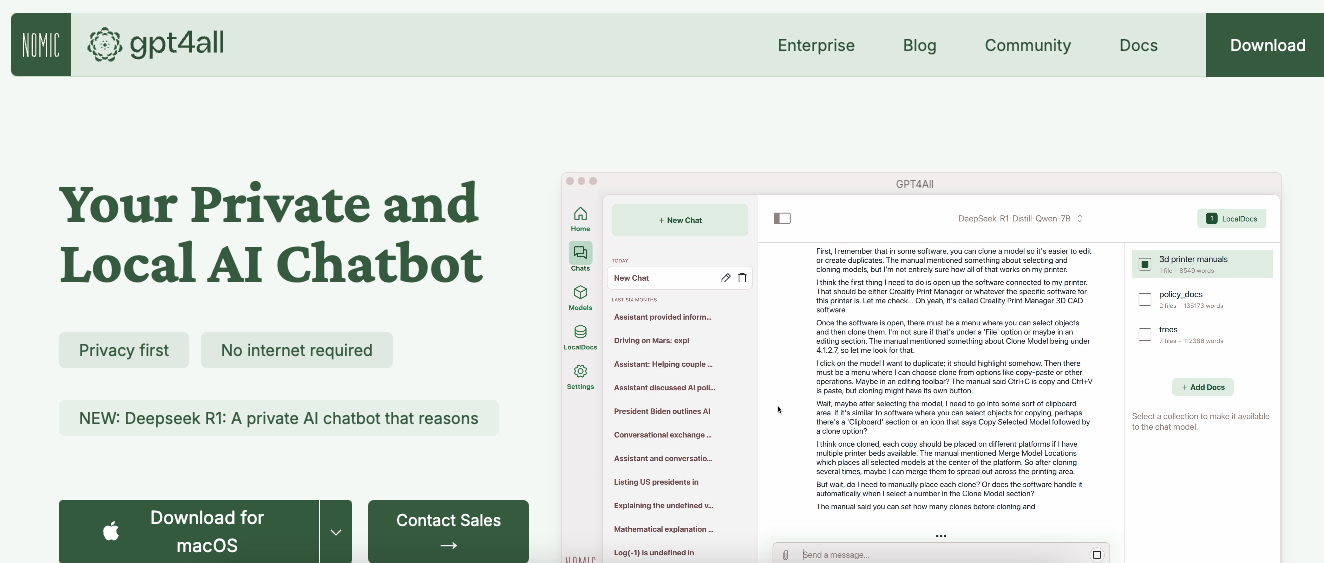

2. GPT4All

- Perfect For: Privacy-focused users

- Works On: Windows, Mac, Linux

Why You’ll Love It:

GPT4All prioritizes data security by running completely offline. Once set up, everything stays on your device: no external servers, no data sharing.

It also provides a rich library of open-source models, offering a variety of AI personalities and functions.

Top Features:

- Full offline operation

- Wide range of open-source models

- Intuitive chat-style interface

- Enterprise version available for advanced use cases

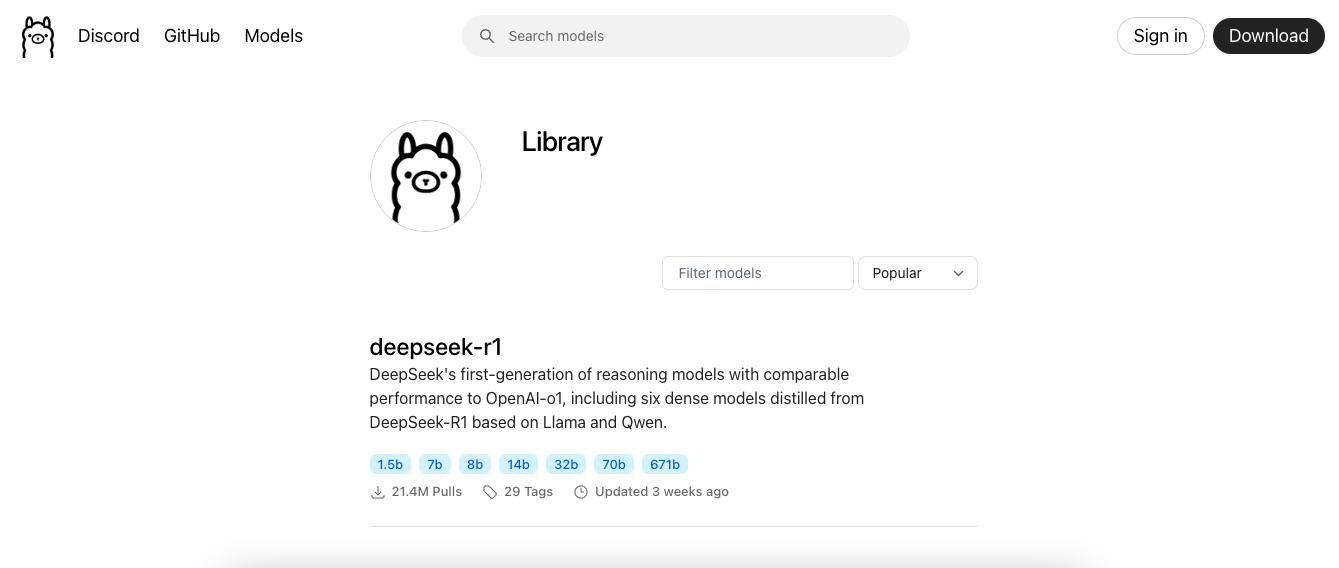

3. Ollama

- Perfect For: Command-line users and developers

- Works On: Windows, Mac, Linux

Why You’ll Love It:

If you prefer working through the command line, Ollama offers speed and efficiency. With just a few simple commands, you can download, deploy, and run AI models without the extra complexity.

Top Features:

- Fast deployment via CLI

- Minimal resource usage

- Powerful yet lightweight

- Ideal for custom AI projects
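
The "few simple commands" workflow can be sketched from Python as well, which is handy if you want to script it. This minimal example wraps the `ollama pull` and `ollama run` commands; the model name you pass (for example `llama3.2`) is an assumption, so substitute any model available in the Ollama library.

```python
import shutil
import subprocess


def ollama_commands(model, prompt):
    """Return the two CLI invocations: download the model, then run one prompt."""
    return [
        ["ollama", "pull", model],
        ["ollama", "run", model, prompt],
    ]


def run_locally(model, prompt):
    """Run both commands in order and return the model's printed reply."""
    if shutil.which("ollama") is None:
        raise RuntimeError("ollama was not found on PATH; install it first")
    pull_cmd, run_cmd = ollama_commands(model, prompt)
    subprocess.run(pull_cmd, check=True)  # downloads the model if missing
    result = subprocess.run(run_cmd, check=True, capture_output=True, text=True)
    return result.stdout.strip()
```

Typing the same two commands directly into a terminal works just as well; the wrapper only helps when you want to call a local model from a larger script.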

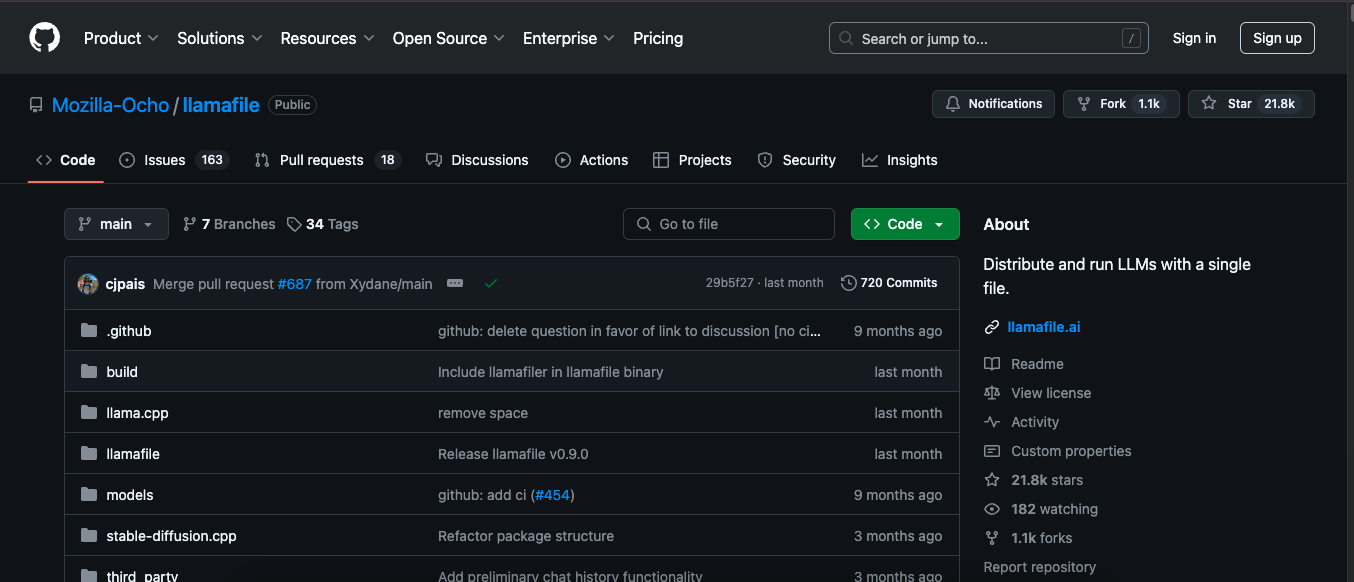

4. Llamafile

- Perfect For: Fast and portable deployment

- Works On: Windows, Mac, Linux

Why You’ll Love It:

Supported by Mozilla, Llamafile simplifies model sharing by packaging them into single executable files. This makes deployment across different devices as easy as opening a file.

Top Features:

- One-click execution

- Cross-platform support

- Optimised for efficient performance

- Great for prototyping and distribution
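
"As easy as opening a file" usually involves one small extra step: telling the operating system the file is allowed to run. The sketch below shows that step in Python under a couple of assumptions: the path you pass (say, `model.llamafile`) is a hypothetical download, and the file is launched with its default behaviour and no extra flags.

```python
import os
import stat
import subprocess
import sys


def make_executable(path):
    """Add execute permission for user, group, and others (Unix-like systems)."""
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)


def launch(path):
    """Start the packaged model as a child process and return its handle."""
    if sys.platform == "win32":
        # Windows decides what is runnable by extension, not permission bits.
        if not path.lower().endswith(".exe"):
            raise ValueError("on Windows, rename the file so it ends in .exe")
    else:
        make_executable(path)
    return subprocess.Popen([os.path.abspath(path)])
```

On Mac and Linux this is the scripted equivalent of running `chmod +x` on the file before starting it.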

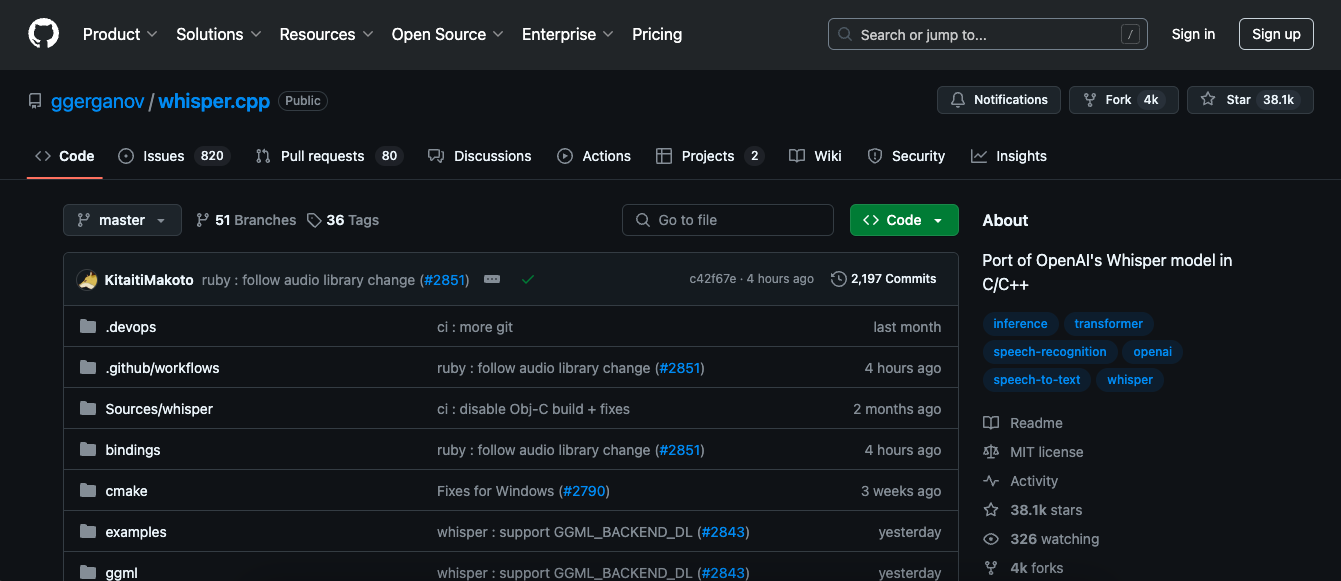

5. Whisper.cpp

- Perfect For: Offline transcription and speech recognition

- Works On: Windows, Mac, Linux

Why You’ll Love It:

Need accurate speech-to-text capabilities without an internet connection? Whisper.cpp delivers fast, high-accuracy transcription in multiple languages, all while keeping your audio data private.

Top Features:

- Precise audio-to-text conversion

- Multilingual support

- Speedy local processing

- Full offline functionality
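
One practical detail: the project’s example command-line tool has historically expected 16-bit WAV audio sampled at 16 kHz, so it is worth checking a recording before transcribing it. The sketch below does that check and builds the command. The binary name `./whisper-cli` is an assumption (it has changed between releases), and you supply the model file path yourself; `-m` and `-f` select the model and the audio file.

```python
import wave

EXPECTED_RATE = 16000  # 16 kHz, the assumed input requirement


def is_16khz_wav(path):
    """Return True if the file is a 16-bit WAV file sampled at 16 kHz."""
    try:
        with wave.open(path, "rb") as wav:
            return wav.getframerate() == EXPECTED_RATE and wav.getsampwidth() == 2
    except (wave.Error, EOFError):
        # Not a readable WAV file at all.
        return False


def transcribe_command(audio_path, model_path, binary="./whisper-cli"):
    """Build the CLI invocation: -m selects the model, -f the audio file."""
    return [binary, "-m", model_path, "-f", audio_path]
```

If the check fails, convert the recording to 16 kHz mono WAV with an audio tool of your choice, then pass the resulting command list to `subprocess.run`.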

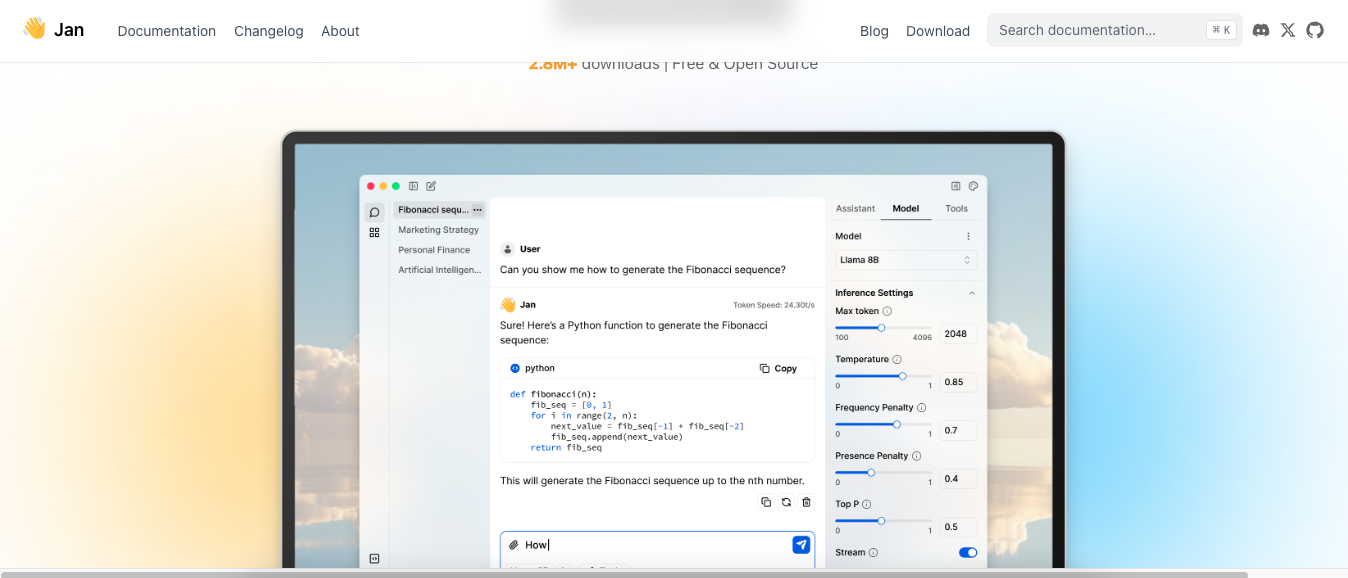

6. Jan

- Perfect For: Open-source enthusiasts and AI customisers

- Works On: Windows, Mac, Linux

Why You’ll Love It:

Jan offers unparalleled flexibility for developers. With support for multiple models and seamless integration with Hugging Face, it’s perfect for those who want to experiment and tailor their AI assistant.

Top Features:

- Open-source with active community support

- Integration with Hugging Face models

- Highly customisable and adaptable

- Great for building personalised AI solutions

How to Choose the Right Local LLM Tool

The best local LLM tool for you depends on your needs and technical comfort level. Here’s a quick guide:

- New to AI? Start with LM Studio for its simple, intuitive interface.

- Privacy is your top priority? GPT4All keeps everything offline and secure.

- Prefer command-line tools? Ollama offers fast, efficient CLI operations.

- Need quick and easy deployment? Llamafile’s one-click model execution is perfect.

- Working with audio transcription? Whisper.cpp excels in offline speech-to-text.

- Love open-source customisation? Jan gives you endless possibilities for model tweaking.

Whichever tool you choose, you’ll be taking a major step toward AI independence — harnessing powerful models while keeping full control over your data and experience.